AI in Helix: Lightweight Integration with Macros

The Helix editor has no built-in AI support. Current approaches use Helix’s LSP integration by launching an AI-focused language server. Macro keybindings offer another way.

I write code in Helix, my favorite editor. The lack of AI integration never bothered me—I often find AI features in editors more distracting than useful. Instead, I use AI explicitly in a separate window. When I need to discuss parts of my codebase or make changes, I prefer this deliberate approach: iterating on suggestions until I’m satisfied, then applying them myself.

AI-powered editors do have a point, however.

They reduce the distance between your codebase and the AI, both for adding context to your queries and for applying edits directly to your code. There are times when I want something small. In these cases, copy-pasting feels like unnecessary friction. Launching an AI language server through Helix’s LSP integration feels too heavy for me.

But there is another way. Helix recently introduced macro keybindings, and combined with its pipe operator, you can create useful AI shortcuts. Let me show you how:

Writing a Pipe-Compatible Node Script

The pipe operator | passes the selection to the standard input of a shell command and replaces the selection with the command’s output.

With the Node.js standard library you can read this input line by line and write the result using console.log().

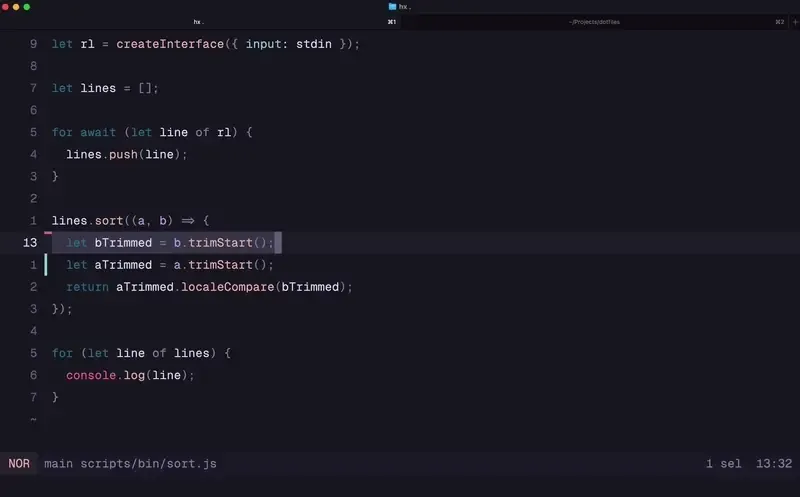

Below is a small utility I use for sorting lines alphabetically.

    import { createInterface } from "node:readline"
    import { stdin } from "node:process"

    let rl = createInterface({ input: stdin })

    let lines = []

    for await (let line of rl) {
      lines.push(line)
    }

    lines.sort((a, b) => {
      let aTrimmed = a.trimStart()
      let bTrimmed = b.trimStart()
      return aTrimmed.localeCompare(bTrimmed)
    })

    for (let line of lines) {
      console.log(line)
    }

You can use this script to sort the lines of a selection in Helix by pressing | and typing node path/to/sort.js.

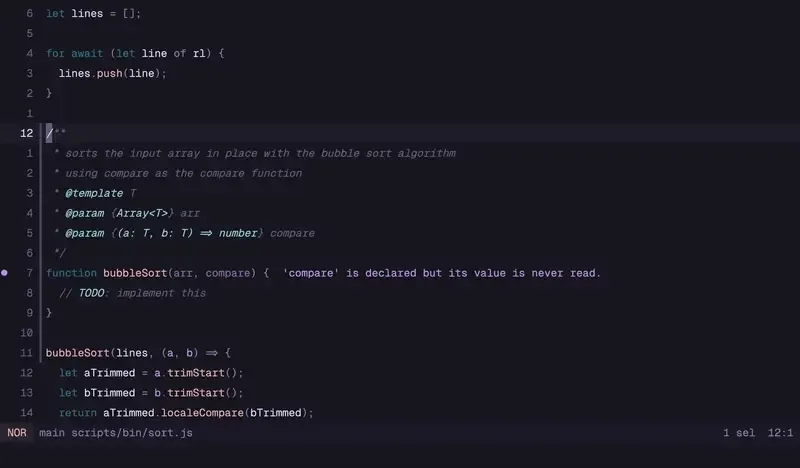

Writing an AI Script in Node

Vercel has created a vendor-agnostic SDK for building AI systems with TypeScript. Calling a model to generate text is as simple as:

    import { generateText } from "ai"

    const { text } = await generateText({
      model: yourModel,
      prompt: "Write a blog post about using AI in Helix.",
    })

They provide adapters for various models that can be used in place of yourModel.

For AI code modification I take the following approach:

- read lines from stdin
- read files provided by -f path/to/file.ts
- construct the prompt
  - base instructions for the model: coding style
  - instructions for the current task: directly replacing input text
  - include additional files for context
  - provide the actual text that should be replaced
- clean up the result
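The first two steps could look roughly like the sketch below. It is not the code from my full script: the helper names match the ones used in the prompt snippet further down, but the bodies, the `parseFilePaths` helper, the optional `input` and `args` parameters, and the long `--file` form of the flag are assumptions made for illustration.

```javascript
import { readFile } from "node:fs/promises"
import { createInterface } from "node:readline"
import { parseArgs } from "node:util"

// Read everything Helix pipes in and return it as a single string.
// `input` defaults to process.stdin; the parameter only exists to ease testing.
async function readLinesFromStdin(input = process.stdin) {
  const rl = createInterface({ input, crlfDelay: Infinity })
  const lines = []
  for await (const line of rl) {
    lines.push(line)
  }
  return lines.join("\n")
}

// Collect every path passed as `-f path` (hypothetical helper).
function parseFilePaths(args = process.argv.slice(2)) {
  const { values } = parseArgs({
    args,
    options: { file: { type: "string", short: "f", multiple: true } },
  })
  // `values.file` is undefined when no -f flag was given.
  return values.file ?? []
}

// Load each file as UTF-8 text, in the { path, file } shape the prompt expects.
async function readFilesFromArgs(args = process.argv.slice(2)) {
  const paths = parseFilePaths(args)
  return Promise.all(
    paths.map(async (path) => ({ path, file: await readFile(path, "utf8") })),
  )
}
```

Because the `-f` option is declared with `multiple: true`, repeating it adds more context files. Note that `parseArgs` is strict by default, so an unknown flag or a stray positional argument makes the script fail instead of being silently ignored.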

Cleaning up the result means extracting the actual code from the response. Models are trained to return markdown, so it’s likely that the response includes at least markdown code fences.
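A minimal sketch of such a cleanup step, assuming the model wraps its answer in at most one fenced block. The version in my full script may differ; the regular expression and the fallback behavior here are illustrative choices.

```javascript
// Build the fence marker programmatically so this snippet can itself
// be embedded in markdown without confusing the renderer.
const FENCE = "`".repeat(3)

// Matches an opening fence with an optional language tag, captures the body
// lazily, and stops at the first closing fence.
const FENCED_BLOCK = new RegExp(FENCE + "[^\\n]*\\n([\\s\\S]*?)\\n?" + FENCE)

// Return the body of the first fenced code block in the response.
// If the model returned bare code, only trailing whitespace is removed,
// so leading indentation is preserved.
function cleanup(response) {
  const match = response.match(FENCED_BLOCK)
  return match ? match[1] : response.trimEnd()
}
```

Only the first fenced block is kept, which fits this workflow because the response replaces a single selection. Any explanatory text the model adds outside the fence is dropped.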

    import { generateText } from "ai"
    import { google } from "@ai-sdk/google"

    // readLinesFromStdin, readFilesFromArgs and cleanup are helpers from the full script
    let input = await readLinesFromStdin()

    let files = await readFilesFromArgs()

    let aiResponse = await generateText({
      model: google("gemini-2.0-flash"),
      prompt: `## Base instructions
    blablabla you're an experienced programmer blabla

    ## Task description
    You'll receive input that needs to be replaced. Treat TODO comments as instructions. Add AI comments for explaining your approach or answering questions.

    ## Files for context:
    ${files.map(({ path, file }) => `### File: ${path}\n${file}\n`).join("")}

    ## Input to be replaced:
    ${input}

    ## Final Note
    Your response will replace the input. Only respond with the code.`,
    })

    console.log(cleanup(aiResponse.text))

You can call this script by selecting text and typing | node path/to/ai.js -f path/to/current/file.ts.

Optionally, you can add more files to the prompt.

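Fortunately, Helix has a shortcut for adding the current file’s path: <C-r>%. In the command prompt, <C-r> inserts the contents of a register, and the % register holds the current file’s path.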

See the full script in my dotfiles.

Creating AI Shortcuts with Macros

I registered a custom function in zsh that executes my ai.js script.

    function ccssmnn-ai() {
      node ~/Developer/dotfiles/scripts/bin/ai.js "$@"
    }

What’s left now is setting up the macro itself.

    [keys.normal.L]
    a = "@|ccssmnn-ai<space>-f<space><C-r>%<ret>"
    A = "@|ccssmnn-ai<space>-f<space><C-r>%"

@ starts the keybinding macro. All following keys act like they were pressed with the editor open. In this case:

- Trigger the pipe command |
- Type the shell command ccssmnn-ai
- Type <space>-f<space><C-r>% to provide the current document path as an argument
- Press return <ret> to execute the script, sending the selection as standard input.

I created both a shortcut that executes directly and another one that allows me to add more files to the prompt.

Is Helix an AI IDE now?

With this little trick, I can add lightweight AI support without leaving the editor.

It can explain code to me when typing // TODO: can you explain this to me? and hitting xLa (select line, trigger macro).

It can also implement functions. Just type // TODO: suggest an implementation into the body and hit mamxLa (select around brackets, extend to the lines, trigger macro).

With this approach you control what the AI can do. The AI SDK supports tool calling. Why not allow it to ask for more files? What about including project-specific rules? I like how I can choose the model, the prompt, the boundaries, and build AI into Helix the way I want. Don’t wait for someone else to build it.

And whenever I’m not satisfied with the immediate result, I can just hit u to undo the change and continue using the conversational approach.